Azure SQL Database’s point in time restore and long term retention are solid backup options, of which you’d have every reasonable expectation for a PaaS service!

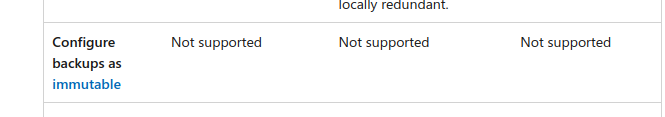

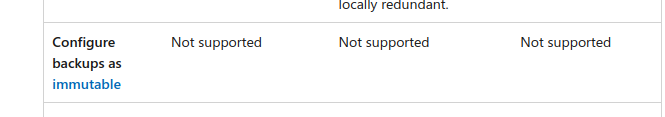

However, Microsoft’s documentation is abundantly clear that, at the time of writing, there is no support for immutable backups via this method.

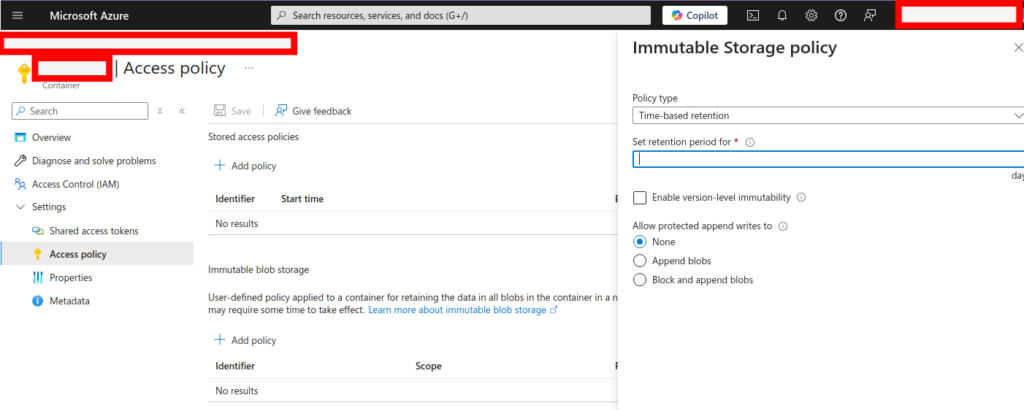

If you actually need to achieve immutable backup storage for Azure SQL database, you’ll need a different approach.

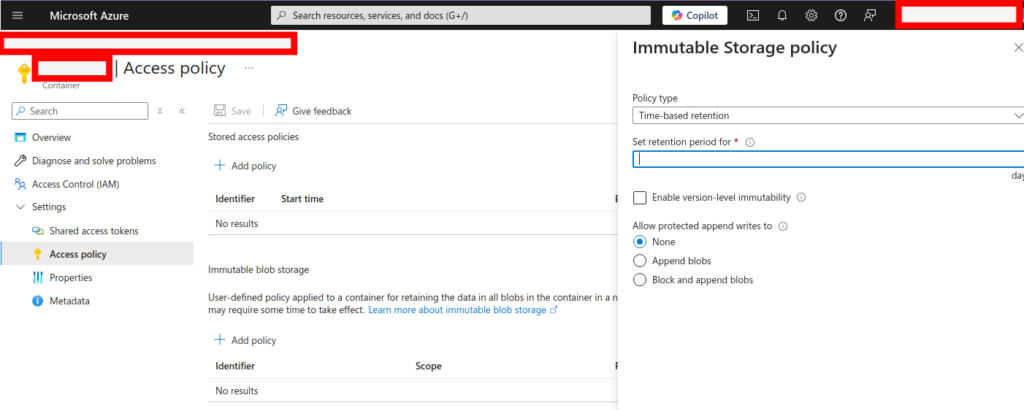

The Export button within Azure SQL Database can be used to export a .bacpac file. If this is stored in a storage account with immutability locked, you have a copy of your data that will be resilient, even to a Global Administrator compromise.

With regard to .bacpac exports, Microsoft helpfully reminds us that:

BACPACs are not intended to be used for backup and restore operations. Azure automatically creates backups for every user database. For details, see business continuity overview and Automated backups in Azure SQL Database or Automated backups in Azure SQL Managed Instance.

However, that leads me right back to “immutability is not supported” point regarding the backups they’re mentioning here. It seems remarkable that “business continuity” is mentioned in the context of backups that are very vulnerable in many BCP scenarios, given the world of ransomware we face today (and will face in the future!)

A .bacpac file held in immutable storage can be imported back into a new Azure SQL database to restore it, but it’s important to note Microsoft’s warning:

For an export to be transactionally consistent, you must ensure either that no write activity is occurring during the export, or that you’re exporting from a transactionally consistent copy of your database.

This is indeed critical. A copy can be made simply with the Copy button within Azure SQL Database. Once complete, press Export on the copy of the database. You can delete the copied database once the export is complete.

The truncated error message I received (and the reason for this blog post) when trying to import a .bacpac that was not transactionally consistent is as follows:

The ImportExport operation with Request Id failed due to 'Could not import package. Warning SQL72012: The object [data_0] exists in the target, but it will not be dropped even though you selected the 'Generate drop statements for objects that are in the target database but that are not in the source' check box. Warning SQL72012: The object [log] exists in the target, but it will not be dropped even though you selected the 'Generate drop statements for objects that are in the target database but that are not in the source' check box. Error SQL72014: Framework Mi'.

If you see this, you’ll need to export a copy of the database, as above, so that no transactions are occurring on that database copy for the duration of the export operation.