If you have at some point enabled Microsoft’s Cloud Update in the Microsoft 365 Apps admin center, and later want to move a device managed this way to Current Channel (Preview) or the beta channel, you may find yourself tearing your hair out wondering why a separate Group Policy, Intune or registry-based instruction to switch channel doesn’t work immediately. You may equally wonder why you can’t quickly take a machine out of Cloud Update control, or indeed deploy the preview channels via the Cloud Update mechanism!

To be fair, Cloud Update taking precedence and causing other policies to be ignored is documented, although how to override this when you need to wrestle control back from Cloud Update is buried a little in Microsoft’s documentation.

I had a test machine that was enrolled in Cloud Update but now needed to be on Current Channel (Preview). It could no longer be controlled by Cloud Update, as the preview channels aren’t an option in that portal. I added the test machine to the scope of an Intune Configuration Profile that sets the update channel via Administrative Templates.

A simple check for updates in any Office app happily informed me I was already up to date. 🙁

I could get the device on the beta channel by running officesetup.exe /configure <xml>, with an XML file as follows:

<Configuration>
  <Updates Channel="BetaChannel" />
</Configuration>
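If you switch test machines between channels often, the same file can be generated rather than hand-edited. This is only a minimal sketch: the function name `build_channel_config` is my own invention, and the only channel value I have actually used here is `BetaChannel`.

```python
import xml.etree.ElementTree as ET


def build_channel_config(channel: str) -> str:
    """Return a minimal configuration XML that only sets the update channel."""
    root = ET.Element("Configuration")
    # The channel value is passed through unchecked; "BetaChannel" is the one used in this post.
    ET.SubElement(root, "Updates", {"Channel": channel})
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Print the XML so it can be redirected into a file for the /configure switch.
    print(build_channel_config("BetaChannel"))
```

Redirect the output to a file and pass that file to /configure as before.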

However, this wouldn’t stick – the next Office update would roll it right back to Current Channel.

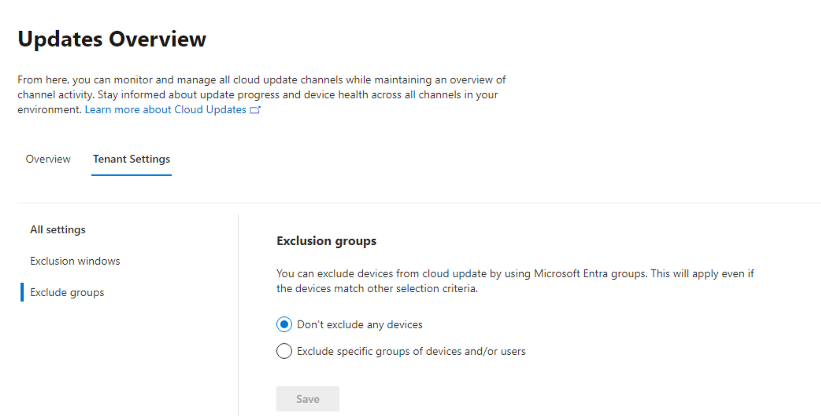

I excluded an Entra ID group containing the primary user and the device from Cloud Update, but this didn’t help immediately, and I didn’t have 24 hours to wait for the exclusion to take effect.

In Tenant Settings, you can exclude a group from Cloud Update management, but it may take some “cloud time” to apply!

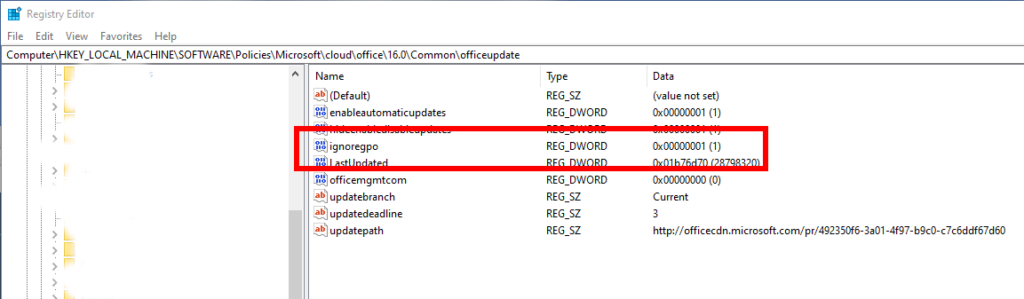

It turns out that once a device is in Cloud Update, if we want to force Cloud Update off without waiting for the machine to drop out of the inventory, we must set this registry value:

HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Microsoft\cloud\office\16.0\Common\officeupdate

Value: ignoregpo, Type: DWORD, Value data: 0

Now, the updates setting from HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Microsoft\office will actually be honoured, and an update check should find the right channel!
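The registry change above could be scripted along these lines. Treat it as a sketch rather than a tested deployment script: the helper name `ignoregpo_command` is made up for illustration, it shells out to the standard `reg add` command, and it assumes an elevated prompt because the key lives under HKEY_LOCAL_MACHINE.

```python
import subprocess
import sys

# Key and value name as given in this post; HKLM is reg.exe's short name
# for HKEY_LOCAL_MACHINE.
KEY = r"HKLM\SOFTWARE\Policies\Microsoft\cloud\office\16.0\Common\officeupdate"


def ignoregpo_command(data: int = 0) -> list[str]:
    """Build the reg.exe arguments that set the ignoregpo DWORD value."""
    return [
        "reg", "add", KEY,
        "/v", "ignoregpo",
        "/t", "REG_DWORD",
        "/d", str(data),
        "/f",  # overwrite an existing value without prompting
    ]


if __name__ == "__main__":
    cmd = ignoregpo_command()
    if sys.platform == "win32":
        # Requires administrative rights to write under HKLM.
        subprocess.run(cmd, check=True)
    else:
        # Dry run on other platforms: just show what would be executed.
        print("Would run:", " ".join(cmd))
```

After running it, trigger an update check in an Office app to see whether the channel policy is now picked up.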