Both software quality and the mechanisms that support its improvement are critical to the security of people’s personal data.

In education, protecting sensitive personal data is an integral part of safeguarding those for whom we are responsible.

It isn’t good enough to shrug our shoulders if sensitive data about the children in our care could easily be compromised and leaked.

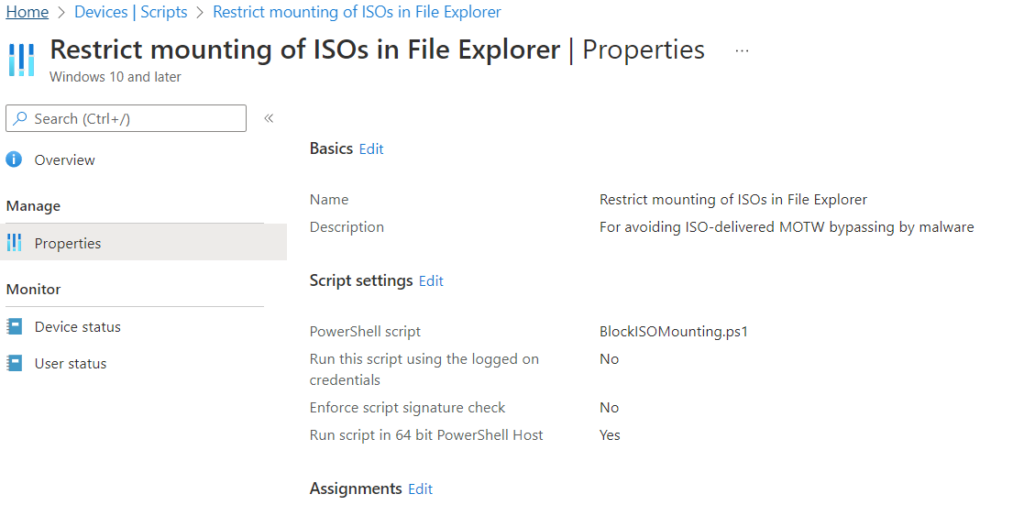

It isn’t good enough to idly preside over a plethora of vulnerable smart things sending who-knows-what to who-knows-where and say we are keeping people safe online.

Unfortunately, we know that software quality in a lot of sectors is… patchy. With the broadest brush strokes, we can separate software into these categories:

- Really great work, made with great care;

- Work that will need ongoing extrinsic motivation to deliver and maintain quality;

- Software that is so badly designed it should not be out there.

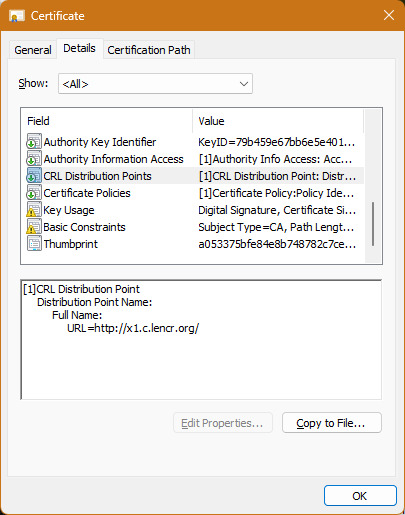

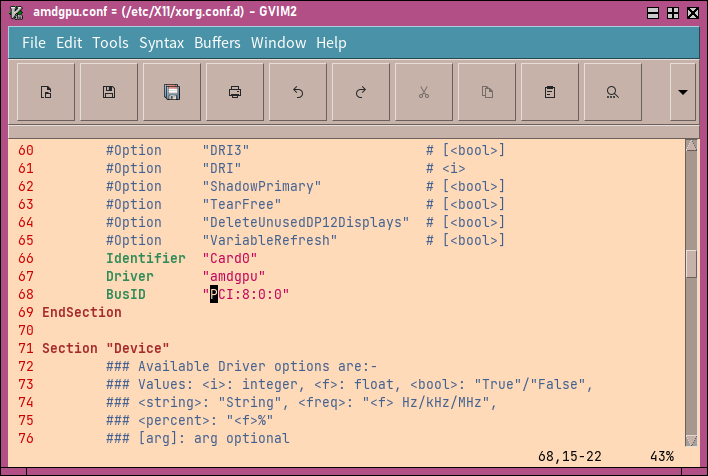

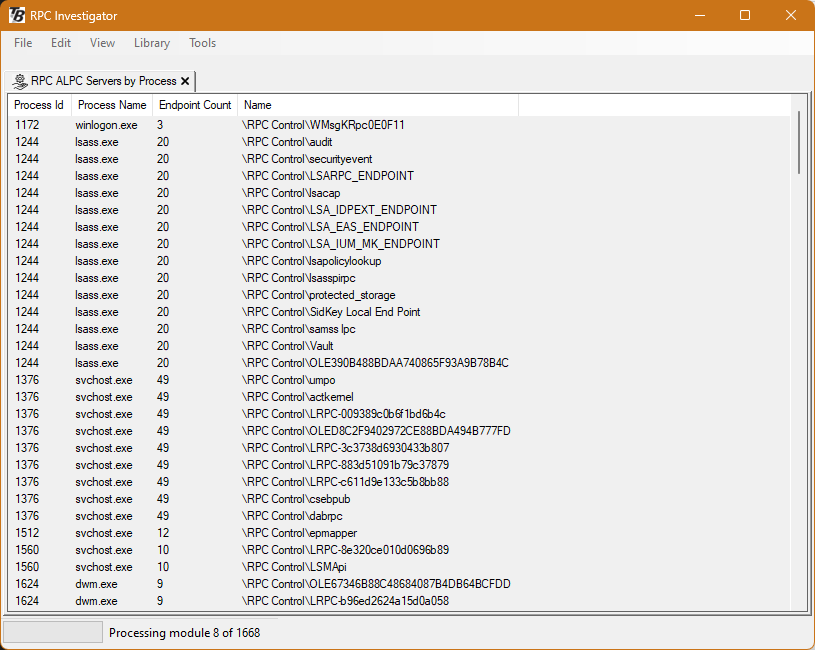

The particular challenge is that it is very difficult, if not impossible, to determine from marketing materials alone which category a given product is in, and so to make an informed decision about whether to invest in it. Proprietary code, licence agreements that forbid investigating how things actually work, software supply chains that are opaque even to the vendor… all of these stand in the way. That assessment is even less likely to succeed when it’s software as a service, a.k.a. “in the cloud”, because you can’t see any of it.

Throughout the software industry, there exists this problem: without regulation and enforcement of professional standards (where are the professional standards?), and because customers can’t accurately assess quality for the reasons I’ve just stated, many vendors get away with delivering inadequate quality. Others could and would do the right thing, but lack the expertise or the extrinsic motivators that help to identify problems and incentivise improvement. Because competitors aren’t held to a higher standard either, there is a race to the bottom on software quality in order to compete.

The best tools we have today for addressing critical security issues include vulnerability disclosure programmes and bug bounties, which actively solicit the support of others to identify and fix vulnerabilities. Even if a rewards programme isn’t part of the picture, the Enlightened Vendor does have a process and responds appropriately to good-faith security researchers.

However, education is an area that often suffers from a lack of computer security expertise, and certainly doesn’t have enterprise budgets. Today, education vendors generally do not fit into the “Enlightened Vendor” category, because people are not yet asking the questions.

“No-one’s ever asked us that before”… well, a lot of the time people should have asked that before.

Where I see myself fitting into this equation: I would like to be someone who can help drive this improvement in education software. My interest in and experience with computer security, together with my position to influence this as a school IT Manager, put me at the crossroads of safeguarding in education and computer security.

So, I will be asking the difficult questions that “no-one has ever asked before”. When trialling software, I will be observing how it actually behaves in practice. I will be asking SaaS vendors why they don’t have a vulnerability disclosure policy and making sure they are thinking about emerging threats.
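One quick, low-effort first check before asking a vendor about disclosure is whether they publish a `security.txt` file, the convention defined in RFC 9116 for advertising a security contact and policy at `/.well-known/security.txt`. The following is only a minimal sketch under my own assumptions: the function names are hypothetical, the parsing is deliberately simplistic (it does not verify signatures or expiry dates), and a missing file does not prove a vendor has no process.

```python
from __future__ import annotations

from typing import Optional
from urllib.request import urlopen


def parse_security_txt(text: str) -> dict[str, list[str]]:
    """Collect 'Name: value' fields from security.txt content.

    Field names are lower-cased; comments (#) and blank lines are skipped.
    """
    fields: dict[str, list[str]] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        name, sep, value = line.partition(":")
        if not sep:
            continue  # not a field line
        fields.setdefault(name.strip().lower(), []).append(value.strip())
    return fields


def disclosure_summary(text: str) -> dict[str, bool]:
    """Report which of the fields we care about are present."""
    fields = parse_security_txt(text)
    return {
        "has_contact": bool(fields.get("contact")),  # required by RFC 9116
        "has_expires": bool(fields.get("expires")),  # required by RFC 9116
        "has_policy": bool(fields.get("policy")),    # optional link to a policy
    }


def fetch_security_txt(domain: str, timeout: float = 10.0) -> Optional[str]:
    """Fetch https://<domain>/.well-known/security.txt, or None on failure.

    Assumption: any network or HTTP error is treated as 'not published'.
    """
    url = f"https://{domain}/.well-known/security.txt"
    try:
        with urlopen(url, timeout=timeout) as resp:
            if resp.status != 200:
                return None
            return resp.read().decode("utf-8", errors="replace")
    except OSError:  # covers URLError, HTTPError and timeouts
        return None
```

In use, you would call `fetch_security_txt` with the vendor's domain and pass any returned text to `disclosure_summary`. Some servers return a generic HTML page with a 200 status for unknown paths, so a result with no `Contact` field is worth reading by eye before drawing conclusions.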

This isn’t going to be particularly easy.

But if we say we care about protecting those we are responsible for from online threats to their safety, growth and development, computer security is an area we should no longer ignore.