As with many other organisations, at my day job we are using the Office 365 service for email, contacts and calendars. There are a few ways to integrate 365 with your local Active Directory, and we have been using Active Directory Federation Services (ADFS) 3.0 for handling authentication: users don’t authenticate on an Office-branded page, but get redirected after entering their email addresses to enter their passwords on a page hosted at our organisation.

We also use the Azure AD Connect tool (formerly called Azure AD Sync, and called something else even before that) to sync the directory with the cloud, but this is only for syncing the directory information — we’re not functionally using password sync, which would allow people to authenticate at Microsoft’s end.

We recently experienced an issue where, suddenly, the endpoints for ADFS 3.0 that handle forms-based sign in (so, using a username and password, rather than Integrated Windows Authentication) were returning a HTTP 503 error. The day before, we had upgraded Azure AD Sync to the new Azure AD Connect, but our understanding was that this shouldn’t have a direct effect on ADFS.

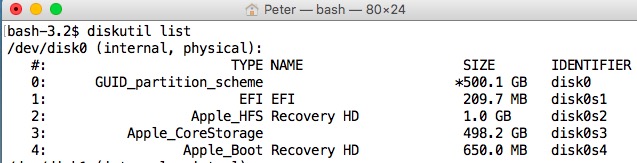

On closer examination of the 503 issue, we would see errors such as this occurring in the AD FS logs:

There are no registered protocol handlers on path /adfs/ls/ to process the incoming request.

The way that the ADFS web service endpoints are exposed is through the HTTP.sys kernel-mode web serving component (yeah, it does sound rather crazy, doesn’t it) built into Windows.

One of the benefits of this rather odd approach is that multiple different HTTP serving applications (IIS, Web Application Proxy, etc.) can bind to the the same port and address, but be accessed via a URL prefix. It refers to these as ‘URL ACLs’.

To cut a very long story short, it emerged eventually that the URL ACLs that bind certain ADFS endpoints to HTTP.sys had become corrupted (perhaps in the process of uninstalling an even older version of Directory Sync). I’m not even sure they were corrupted in the purely technical sense of the word, but they certainly weren’t working right, as the error message above suggests!

Removing and re-adding the URL ACLs in HTTP.sys, granting permissions explicitly to the user account which is running the ‘Active Directory Federation Services’ Windows service allowed the endpoints to function again. Users would see our pretty login page again!

netsh http delete urlacl url=https://+:443/adfs/

netsh http add urlacl url=https://+:443/adfs/ user=DOMAINACCOUNT\thatisrunningadfs

We repeated this process for other endpoints that were not succeeding and restarted the Active Directory Federation Services service.

Hurrah! Users can log in to their email again without having to be on site!

This was quite an interesting problem that had me delving rather deeply into how Windows serves HTTP content!

One of the primary frustrations when addressing this issue was that a lot of the documentation and Q&A online is for the older release of ADFS, rather than for ADFS 3.0! I hope, therefore, that this post might help save some of that frustration for others who run into this problem.

Isn’t it funny that so frequently it comes back to “turn it off, and turn it back on again”? 🙂