Ingesting data from Zscaler Internet Access and Zscaler Private Access into a SIEM is a valuable technique for identifying risky endpoint activity or system compromise. It also gives you a (hopefully) immutable[1] copy of this audit data to support a post-incident investigation.

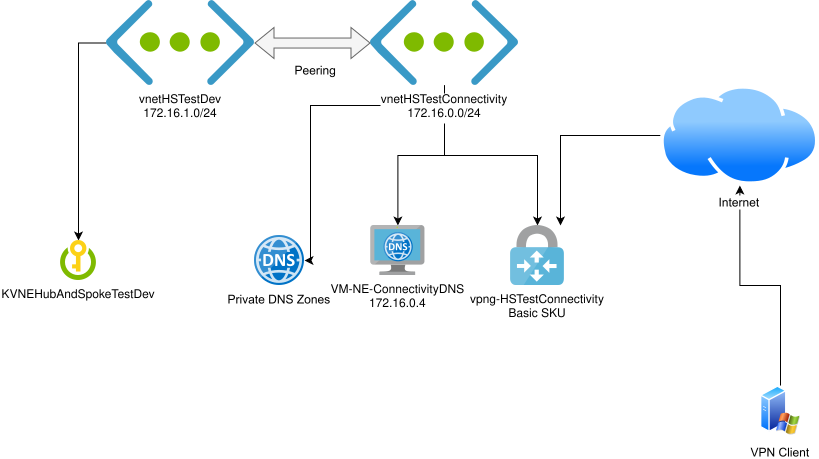

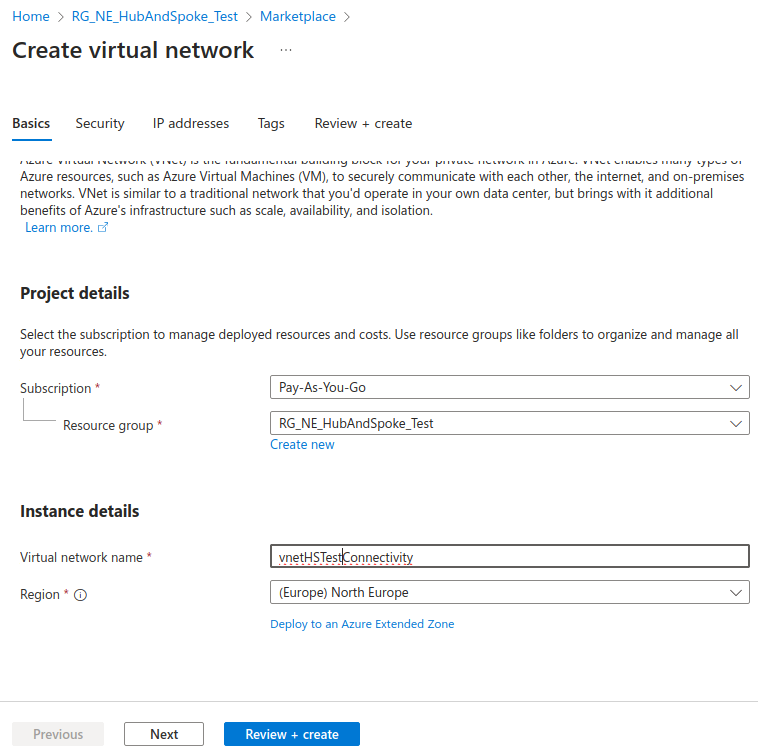

I’ve configured both Zscaler Internet Access and Zscaler Private Access data to be ingested into Microsoft Sentinel[2], but have occasionally found that the somewhat circuitous path ZIA data takes into the SIEM (NSS VM, to another Linux VM over syslog, then to the Azure Monitor Agent, and finally to Sentinel) can be brittle. A reboot of the collector VMs has always fixed this, but you have to know that the data flow has stopped!

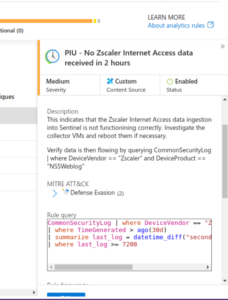

I have written a couple of KQL queries for this scenario – one for Zscaler Internet Access (ZIA) and one for Zscaler Private Access (ZPA).

Each triggers an incident if no log entries are received for the relevant service within a 2-hour period.

You can import these to your own Sentinel environment by clicking Import in Analytics rules and providing the ARM template files linked below.

Zscaler Internet Access

Download as ARM template

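```kql
CommonSecurityLog
| where DeviceVendor == "Zscaler" and DeviceProduct == "NSSWeblog"
| where TimeGenerated > ago(30d)
// Seconds elapsed since the most recent ZIA web log entry
| summarize last_log = datetime_diff("second", now(), max(TimeGenerated))
// Return a row only if the newest entry is at least 2 hours (7200 seconds) old
| where last_log >= 7200
```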

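Schedule this rule to run every 2 hours, with the lookup (“look back”) period set to its maximum. The lookup window needs to be much longer than 2 hours so that the query can still see the last entry received before an outage. The query returns 0 results while data ingestion is working correctly, so set the rule to alert when the number of query results is greater than 0.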
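One edge case to be aware of: if no matching entries exist anywhere in the lookup window (for example, after a very long outage, or before any data has been ingested), `max(TimeGenerated)` is null, the `>=` comparison never matches, and the query returns no rows. Below is a minimal sketch of a variant that treats that case as an outage too. The `isnull()` check and the `threshold_seconds` name are illustrative additions, not part of the original query; the rest mirrors the query above.

```kql
// Sketch only: variant of the ZIA query that also fires when no rows are found at all.
let threshold_seconds = 7200; // 2 hours; hypothetical name, adjust to taste
CommonSecurityLog
| where DeviceVendor == "Zscaler" and DeviceProduct == "NSSWeblog"
| where TimeGenerated > ago(30d)
// With no group-by key, summarize still returns one row on empty input; max() is then null.
| summarize last_log = datetime_diff("second", now(), max(TimeGenerated))
| where isnull(last_log) or last_log >= threshold_seconds
```

The same change should apply to the ZPA query by swapping in `ZPAUserActivity` and `LogTimestamp`.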

Zscaler Private Access

Download as ARM template

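```kql
ZPAUserActivity
| where LogTimestamp > ago(30d)
// Seconds elapsed since the most recent ZPA user activity entry
| summarize last_log = datetime_diff("second", now(), max(LogTimestamp))
// Return a row only if the newest entry is at least 2 hours (7200 seconds) old
| where last_log >= 7200
```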

Schedule this rule to run every 2 hours, with the lookup (“look back”) period set to its maximum, and alert when the query returns more than 0 results.
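Before enabling the rule, it may be worth checking how regularly ZPA logs normally arrive, so that a 2-hour threshold does not fire during legitimately quiet periods such as nights or weekends. A rough sketch, intended to be run interactively in Logs rather than as an analytics rule; the 14-day window and 1-hour bin size are arbitrary choices:

```kql
// Sketch only: hourly ZPA event counts, to spot natural quiet periods and past outages.
ZPAUserActivity
| where LogTimestamp > ago(14d)
| summarize events = count() by bin(LogTimestamp, 1h)
| order by LogTimestamp asc
| render timechart
```

Hours with no events do not appear in the result at all, so look for missing hours rather than zero counts.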

If you have not fully configured ZPA log ingestion, including adding the custom ZPAUserActivity function to your Sentinel workspace, the rule will fail with the error “Failed to run the analytics rule query. One of the tables does not exist”. To fix this, follow the Zscaler and Microsoft Sentinel Deployment Guide (“Configuring NSS VM-Based Log Ingestion for ZPA”, page 126).

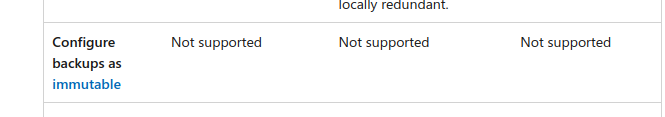

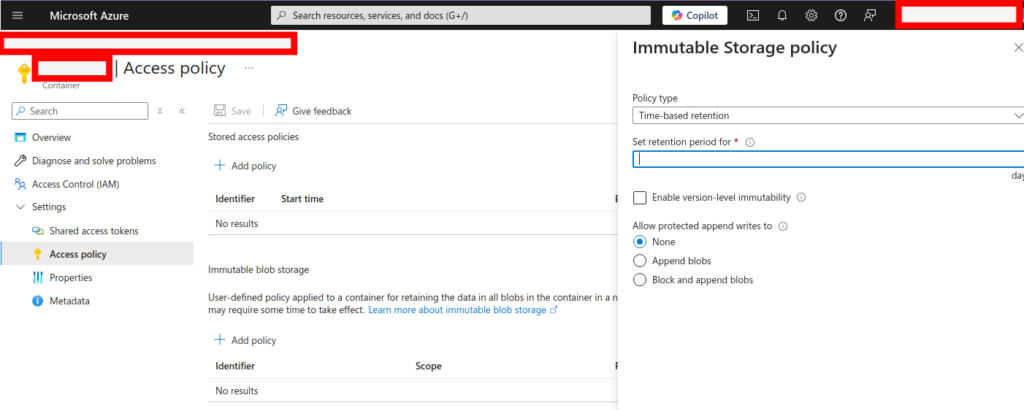

1: The keys to the kingdom are then the Sentinel/Log Analytics workspace, which hopefully your attacker has not escalated privileges far enough to delete. Sentinel and Log Analytics workspaces have nothing like the “immutable vaults” feature of Azure Recovery Services vaults. You can set a standard Azure resource lock, but a privileged attacker could just delete the lock!

2: Zscaler recently (just in time) updated the ZPA ingestion workflow to use the Azure Monitor Agent rather than the deprecated Log Analytics Agent. This took a little reconfiguring and was quite an involved process!