A Case of Curious Coincidences…

Put on your citizen journalism hats, because we’re about to go on an investigative adventure.

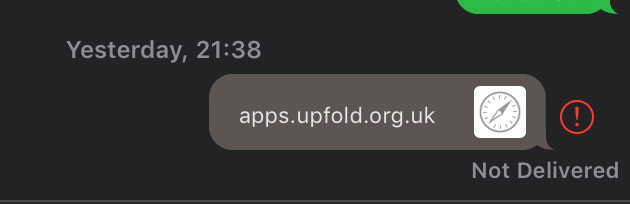

UPDATE: I have recently received more unsolicited calls from 0161 854 1214, where no message is left. I have not yet verified who this is. It seems reasonable to presume that this is in the same range of phone numbers, given the MO.

I have received a number of calls from 0161 854 1173 recently (May 2018). A voicemail message is never left, but if you pick up, you always hear a very short extract of hold music before you are connected with a person (automatic dialer, perhaps?) The agent typically identifies themselves as from the “Accident Advice Centre” or “Accident Advice Helpline”.

I have made a number of call recordings of my interactions with this phone number. I stress that these recordings have been made lawfully: thanks to my service provider, incoming calls to my number automatically play a pre-recorded message, “this call is recorded”, before the ringing begins. (This is common practice, similar to the recorded messages that one hears when calling companies.)

I was of course curious who was calling and how my phone number had been obtained.

Me: “I’m just wondering really where the number that you’ve called came from? I mean, obviously —”

Agent: “Oh, your number, sorry, sir? Your number?”

Me: “Yes. Yeah, yeah, yeah.”

Agent: “Ah, right, I do apologise. I thought I — no — no worries. Yeah, we work off the Accident Portal¹, sir. So when you ring your insurance and tell them about your accident, er, it comes under what you would call the Accident Portal. Now [inaudible] organisations that have access to the Accident Portal, OK? The DVLA, the Accident Advice Centre, that’s us, the Motor Insurance Bureau and the DVLA². Sorry, the [inaudible], the DVLA and your insurers.”

Me: “You’re a government organisation?”

Agent: “We are a [government/governing]³ body, OK? Er, we were set up to make sure your insurance companies are doing their job correctly. Also just to make sure the complaints[?] OK and happy with your insurance [inaudible] and everything, and just to make sure you know, you’ve received all your requirements — your legal, erm, payments and stuff. Now—”

Me: “What I’ve [inaudible] is to find out information about your organisation, because I’ve struggled on a number of occasions to actually find out any more.”

Agent: “Say again, sorry?”

Me: “I’ve struggled on a number of occasions to actually find out any more information about your organisation.”

Agent: “Just bear with me one second, I’m going to get my manager, OK?”

Me: “I tend to start asking these questions and the call seems to be cut off.”

Agent: “Right, well, why did you think your call seems to be getting cut off?”

Me: “I don’t know.”

Agent: “Is this our company you’re talking about, or…?”

Me: “Yeah, calls from this number. The number you are calling from, and, yeah, I seem to ask for information about the organisation and then I don’t seem to — the call doesn’t seem to stay, uh, connected.”

Agent: “Are you just looking at the [inaudible], because that’s just an area code, so it could be a million, um, you know, a million people calling you.”

Me: “— the full phone number”

Agent: “Let me just see who’s called you in the past, give me one second.”

Agent: “Yeah, I can see that our, our, our, my colleague [name] tried contacting you. Erm, but she put it down to answering machine, like, we couldn’t get hold of you, and that’s why it’s come back through today.”

Me: “Yeah, as I said, it’s not the first call. I’ve had a number of calls, but, um…”

Agent: “Right, so are you aware of the compensation that has been set aside for you, Peter? — — Hello?”

Me: “Again, what I’m wondering about is more information about your organisation.”

Agent: “Right, like I said— That’s completely fine, obviously you’re only [inaudible] obviously want to know who we are and make sure we have your best interests at heart. Erm, just bear with me and I’m going to get my manager, she’ll just come over and give you a bit more of an explanation of who we are and why we are calling you, OK?”

Me: “That would be great, thank you.”

1: The closest thing to “Accident Portal” I can find is “Claims Portal Limited”, “a tool for processing low value personal injury claims”. I have no evidence that this is the “portal” in question, however. Investigations into whether Claims Portal Limited have my data are ongoing. Unfortunately, this company’s website Terms of Use prohibit me from linking to them without prior consent, so you will have to use a search engine yourself. (“You may not provide a link to this web site from any other web site without first obtaining Claims Portal Ltd’s prior written consent.”)

2: It’s credible that there is a database of incidents for legitimate organisations like insurance companies. That data presumably would be processed for the purposes of the prevention and detection of fraud. It certainly would not be permitted to use the data for “leads” for claims management companies, especially if the data subject is not specifically aware of the data processing in the first place.

3: My recording is not clear enough to distinguish between these two words. I did want a clear answer as to whether they were identifying themselves as some kind of official body, or as a for-profit company. We’ll discover more about the identity of the organisation later.

After a brief interlude, the manager spoke with me. I asked for the full organisation name and the registered office.

Manager: “It’s the Accident Advice Helpline, OK?”

Me: “OK, so that’s Helpline. Is that Limited?”

Manager: “It’s just the Accident Advice Helpline. So basically we make the follow-up calls, Peter —”

Me: “— the name of the organisation.”

Manager: “Accident Advice Helpline. Yes?”

Me: “OK, so not Accident Advice Helpline Limited…”

Manager: “Yes. OK? So, basically what we do is we make the follow-up calls to make sure that each and every person that has been involved in a recent road traffic accident or an incident — is being looked after, and offered a 5-star service so they’ve got the courtesy vehicle in place and the car’s in the garage and that you’re happy with the recovery of the vehicle etc. And obviously we explain to them about the payment when it’s a non-fault incident, there’s a payment automatically set aside¹ — erm — which is for minor discomfort², so this payment has got nothing to do with your insurers, it comes from the third party, the fault driver’s insurance—”

Me: “—so the information you’ve received about my number. Where has that come from, please?”

Manager: “That basically is uploaded onto the database. When you pay your premium — and everyone in the UK and Scotland pays their 0.34% of all the premiums put together — creates the Accident Advice Helpline³, so we’re authorised⁴ to receive all the, basically, the details of incidents and make sure that you’re being offered a good service by your insurers and then obviously, like I said, we ask you about your courtesy vehicle, the recovery of your vehicle, if you’re happy with the services and then obviously if you’re non-fault we explain that you are entitled to a payment, which I believe [original person who called me]’s explained to you already for the minor discomfort — you’ve had your seatbelt on⁵, someone’s collided into your vehicle and you’ve been involved in a low-velocity impact collision you are automatically entitled to a payment from the third-party⁶. So, like I say, just to confirm — erm — and to reiterate, this has got nothing to do with your insurers, this is coming from the fault driver’s insurance. Does that make sense?”

Me: “Erm — so, does your organisation have a registered office?”

1: Automatically set aside by whom I wonder?

2: “Minor discomfort”. Remember that justification that has been given for the “payment”, as we’ll be coming back to it later.

3: If I’m understanding the manager correctly, she is stating that the organisation is created from funding from everyone in the UK and Scotland [sic] and, I presume I am meant to believe, that the “automatic payment” comes from those monies. There is no evidence for this.

4: I did not press this point during the call — I was conscious that asking too many awkward questions seems to correlate with early termination of the call — but I’d love to know by whom they were authorised to receive my details. The “database”?

5: Another reference to “minor discomfort”, but then we move away from that and get more specific. Indeed, this is the closest I have come to any suggestion of the type of claim they’d actually want to pursue on my behalf. Mentioning the seat belt suggests they might look to see if a whiplash claim was a possibility if I continued further with them.

6: Automatically entitled? And this is “coming from the fault driver’s insurance”? I don’t accept these statements are true.

I will spare you the details of me explaining that asking for the registered office meant that I wanted a street address!

I eventually got this registered office from the manager:

50-52 Chancery Lane

London

WC2A 1HL

This address appears as the registered office address for an Accident Advice Helpline Limited, company 05121321. This doesn’t exactly match with the manager’s statement that it is “just the Accident Advice Helpline”, but the registered offices are the same.

The last filed accounts with Companies House were on 30th June 2017 and were accounts for a dormant company. I don’t think I can determine if the company is still dormant until they next file accounts in June.

(Coincidentally, Accident Advice Centre Limited (10275785), with registered office 8 Exchange Quay, Salford, United Kingdom, M5 3EJ, was dissolved on 13th June 2017, weeks before those dormant accounts were filed for “…Helpline”. I have a call recording from the same phone number, also from this month, where the agent identifies as from the “Accident Advice Centre” and confirms that this was the address of that organisation — “Yes, that’s our address”. So I’m still not sure who exactly is calling me from this same Manchester phone number — is it “Centre” or “Helpline”?)

I clicked on the name of the first company director for Accident Advice Helpline Limited listed on Companies House, and discovered this individual holds 36 directorships, almost all of them also with correspondence addresses of the London address as above.

Some of the more interesting ones:

I stress that all of the companies above can be reached at the 50-52 Chancery Lane address above.

The list seems rather comprehensive and efficient, in the sense that the whole process of lead generation, claims management, cost assessment and medical assessment for personal injury claims could, in theory, be administered all from this one building.

With all these companies physically located in the same building and with at least one common company director, I wonder how the issue of conflicts of interest is dealt with?

For example, I am sure that a “costs consultants” business would want to act in good faith to (I assume) estimate costs associated with an incident, but with such a close link to legal services firms and claims management firms that may be interested in maximising the assessment of costs… I will say that it raises ethical and procedural questions that I am sure the organisations involved will be happy to answer.

Back to my calls: I take the view that, while they may not want any money directly from me, this is a marketing activity. They are a private company, trying to generate leads for personal injury claims.

My phone number is listed in the UK’s Telephone Preference Service. Let’s look at the legal obligations that this places on organisations:

Direct marketing telephone calls: it is unlawful for someone in business (including charities or other voluntary organisations) to make such a call to any Individual if that Individual has either told that business or organisation that he/she does not want to receive such calls or has registered with the Telephone Preference Service that they do not wish to receive such calls from any business or organisation.

I notified the manager that my number is in the TPS and that, therefore, I took the view that the call was unlawful.

Me: “Given that the number you have called is in the Telephone Preference Service, um, list of numbers not to call, um, unfortunately the calls you’ve been making are actually unlawful under that relevant legislation¹. When you said that this isn’t a marketing call—”

Manager: “Oh for goodness sake.”

Me: “I’m sorry?”

Manager: “Do you want me to make a payment? Yes or no?”

Me: “Hello?”

Manager: “I assume no.”

Me: “Are you still there?”

[some confusion — I am asking “are you still there” because I am conscious that the call is likely to end soon]

Manager: “— so you don’t want to move forward with the payment, so I’m going to take you off the system, thank you — [hold music for ~0.5 seconds, then call disconnects]”

1: http://www.legislation.gov.uk/uksi/2003/2426/regulation/21/made

I’m struggling to think of another “helpline” that would normally have managers who say “oh for goodness sake” to the people they “help”.

I do hope that she did indeed “take [me] off the system”. I should never have been on there in the first place — and if the company was checking numbers against the TPS list before making marketing calls, as they are legally required to do, this never would have been an issue.

My advice? Do not deal with this organisation, or any with a similar name and similar spiel. Perhaps calmly ask them a few questions about who they are, and see whether their story matches the ones above.

If any relevant official investigatory body wishes to contact me for further details regarding the way this organisation has identified itself, the calling of numbers listed in the TPS, etc., you are most welcome. Your call may be recorded. 🙂